Introduction to Large Language Models

Lecture 7: Scaling data size, Data sources, Data Pre-processing pipeline, Data mixing

Mitesh M. Khapra, Arun Prakash A

AI4Bharat, Department of Computer Science and Engineering, IIT Madras

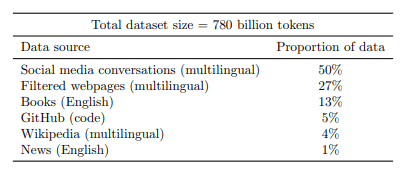

We know that the performance of DL models scales with data. The datasets that we have seen so far are listed in the table

| Model | Dataset | Size |

|---|---|---|

| GPT | Book Corpus | 0.8 Billion words |

| BERT | Book Corpus, English Wikipedia | 3.3 Billion Words |

| GPT-2 | WebText | ~19 Billion tokens |

| T5 | C4 | ~175 Billion tokens |

GPT-2 (1.5 Billion parameters) and T5 (10 Billion parameters) were trained on 19 Billion and 175 Billion tokens, respectively.

Is it necessary to scale the size of the dataset even further?

What are all the aspects (duplication, toxicity,bias) that we need to consider while building a larger dataset?

If yes, how do we source the data?

Module 1: Motivation for Scaling the data size

Mitesh M. Khapra, Arun Prakash A

AI4Bharat, Department of Computer Science and Engineering, IIT Madras

Scaling Law: The more the data, better the model

The other factor that motivated scaling the data size was the study on Scaling Laws

(published a few months after T5)

Given the number of non-embedding parameters \(N\) and the size of the dataset \(D\) (in tokens), the test loss

where,

\(N_c = 8.8 \times 10^{13}\) : 88 Trillion parameters

\(D_c=5.4 \times 10^{13}\) : 54 Trillion tokens

\(\alpha_N = 0.076 \)

\(\alpha_D = 0.095 \)

The study on T5 demonstrated that the model overfits if the data is repeated for many epochs during training. This suggests that we should scale the data size

Suppose we have a model with 100 million (non-embedding) parameters

and a dataset with 10 million tokens

What will be the training loss

Of course, the model will drive the training loss to zero!

However, the test loss changes according to the scaling law

What happens if we increase the size of the dataset? Let's see

What happens if we increase the size of the dataset? Let's see

Click on the slider to increase the value of D by 10 million tokens (or use right/left arrows in the keyboard)

Test lossIndex

Increasing the number of tokens reduces the model's test loss

This motivated the community to scale both the model and data size [Ref]

Fruit Fly

Honey Bee

Mouse

Cat

Brain

# Synapses

Transformer

GPT-2

Megatron LM

GPT-3

GShard

Scaling Parameters

of the model requires?

BookCorpus

Wikipedia

WebText (Closed)

RealNews

C4

Scaling Dataset

proportionately

Scaling Parameters

of the model requires..

While data has been growing......

we show that it is possible to achieve state-of-the-art performance by training exclusively on publicly available data

we propose to explore how web data can be better processed to significantly improve its quality, resulting in models as capable, if not more capable, than models trained on curated corpora

Open source data is good enough

Data Quality Is super important

Emphasizing diversity

we explore the improvement that can be obtained along a different axis: the quality of the data.

BookCorpus

Wikipedia

WebText(closed)

RealNews

The Pile

ROOTS

Scaling Dataset

proportionately

Scaling Parameters

of the model requires..

Falcon

RedPajama

DOLMA

C4

Scale

Quality

Diversity

Three axes of Data

The Pipeline

Sourcing Data

(Webpages, Code, Arxiv,..)

Cleaning Data

(deduplication, quality filtering,..)

Datasets:

C4, mC4, Stack, The Pile, DOLMA, WebText, MassiveWeb (not public), ..

LLM

Greater than 100 petabytes (PB)

100 TB

1 to 11 TB

1 to 11 TB

1 to 3 T tokens

Module 2: Data Sourcing and Cleaning Pipeline

Mitesh M. Khapra, Arun Prakash

AI4Bharat, Department of Computer Science and Engineering, IIT Madras

Disclaimer

Detailed information about the data-cleaning pipeline is available publicly for the following datasets

-

C4 from T5 (2019)

-

The Pile (2020)

-

Falcon (2023)

-

ROOTS (2023)

-

Redpajama-v1/v2 (2023)

-

DOLMA(2024)

-

Sangraha (2024)

Often, datasets used in pre-training large models lack proper documentation. Technical reports typically only provide very basic information

For example, MassiveWeb used in training Gopher model uses a custom HTML scraper using Googles SafeSearch signal for filtering

In this lecture, we attempt to give you a comprehensive idea about various data processing pipelines.

Almost all the pre-training datasets were constructed by scraping/crawling the web

One can write a custom HTML scraper or use Common Crawl (CC) for fetching the data

Each month they release a snapshot of the web that contains about 3 to 5 billion web pages

the raw data contains duplicate, toxic, low-quality content and also personally identifiable information

Garbage in - Garbage out (GIGO)

Recall the saying

So it is important to clean the raw text

Well, the web contains more than 100 petabytes of data.

Let us understand the data format of the CC snapshots

Data Format

Therefore, it is a challenging task to build an automatic pipeline that takes in the raw text and outputs clean text

The raw CC data is available in three types

-

WARC (Web ARChive, 86 TiB)

-

WAT ( Web Archive Transformation, 21 TiB)

-

WET (WARC Encapsulated Text , Plain texts, 8.69TB). Informally, Web Extracted Text

- To know more

The pipeline takes in the raw data in any one of these formats and produces clean text

To build a large dataset, researchers often take CC snapshots of multiple months or years

It is also challenging to directly measure how clean the output text is.

Pre-processing pipeline

To get high quality text from the raw data, the pipeline requires mechanisms

to detect toxicity level (bad words, hate speech, stereotypes,..) of the content in the pages

to detect the language of the web pages

to detect quality of the content in the pages

to detect and remove Personally Identifiable Information (PII) in the pages

to deduplicate the content (line, paragraph and document level)

To get high quality text from the raw data, the pipelines require mechanisms

(Similarity measure using hashing/ml techniques )

(Simple heuristics or LM Model,Perspective AI)

(LM model trained with reference text like Wikipedia or books, Simple heuristics)

(Regex Patterns)

to detect toxicity level (bad words, hate speech, stereotypes,..) of the content in the pages

to detect the language of the web pages

to detect quality of the content in the pages

to detect and remove Personally Identifiable Information (PII) in the pages

to deduplicate the content

Pre-processing pipeline

Types of Deduplication

Exact duplication

The orbit of Aditya-L1 spacecraft is a periodic Halo orbit

The orbit of Aditya-L1 spacecraft is a periodic Halo orbit

webpage-1

webpage-2

Fuzzy (near) duplication

The orbit of Aditya-L1 spacecraft is a periodic Halo orbit which is located roughly 1.5 million km from earth on the continuously moving Sun

The orbit of Aditya-L1 spacecraft is a periodic Halo orbit . The orbit is located roughly 1.5 million km from earth on the continuously moving Sun

Exact deduplication Algorithm

Fuzzy deduplication Algorithm

Suffix array

-

MinHash

-

SimHash

-

Locality Sensitve Hash (LSH)

A Basic Pipeline (fastText)

Language Detection

hi

ta

en

Deduplication

(using hashing)

Deduplication

(using hashing)

Deduplication

(using hashing)

.WET

(8.6 TiB) compressedIt satisfies only two requirements

Uses line level deduplication

The filtered text could still contain toxic, low-quality and PII

CCNet Pipeline

Designed for extracting high-quality monolingual pre-training data

This is also helpful for low-resource languages as datasets like Bookcorpus and Wikipedia are only for English

Even if we wish to use Wikipedia dumps for other languages, the size won't be sufficient

For example, Hindi has only 1.2 million pages,Tamil has only 0.5 million pages where as English has 59 million pages [source]

Therefore, we need to use CC dumps (years of dumps)

This is a compute-intensive task and requires massive parallelization with carefully written code to execute them

CCNet Pipeline

Uses paragraph level deduplication

Divide each webpage into three portions: head, middle and tail . Obtain quality score for each portion using a pre-trained language model on a targeted domain (say, Wikipedia)

Please enable JavaScript to use our site.

Home

Products

Shipping

Contact

FAQ

Dried Lemons, $3.59/pound

Organic dried lemons from our farm in California.

Lemons are harvested and sun-dried for maximum flavor.

Good in soups and on popcorn.

The lemon, Citrus Limon (l.) Osbeck, is a species of small evergreen tree in the flowering plant family rutaceae. The tree's ellipsoidal yellow fruit is used for culinary and non-culinary purposes throughout the world, primarily for its juice, which has both culinary and cleaning uses. The juice of the lemon is about 5% to 6% citric acid, with a ph of around 2.2, giving it a sour taste

ML Classifier

ML Classifier

ML Classifier

quality score

quality score

quality score

CCNet Pipeline

Deduplication

(using hashing)

.WET

Uses paragraph level deduplication

Language Detection

Targeted Quality filtering

Divide each webpage into three portions: head, middle and tail . Obtain quality score for each portion using a pre-trained language model on a targeted domain (say, Wikipedia)

Remove low-quality pages based on a threshold

.JSON

It satisfies three requirements

By following this pipeline, they have extracted documents for 130 languages (that has at least 1000 documents)

CCNet Pipeline

Number of tokens (using the sentence piece tokenizer) in each language after deduplication (of Feb 2019 snapshot)

Recall, Tamil has 0.5 million Wikipedia pages, whereas by using CCNet pipeline on Feb 2019 snapshot of common crawl, one could get 0.9 million documents for the language (Note, there may be a overlap)

\(\approx\)550 Billion tokens

\(\approx 1.5\) Billion tokens

Colossal Clean Common Crawl (C4) Pipeline

It is an extension of CCNet with additional filtering heuristics.

.WET

CC

20 TB

En

C3

6 TB

C4

750 GB

RealNews like

35 GB

Web-Text like

17 GB

We can get diverse data such as news, stories, webtext by domain filtering

They released the clean dataset (named C4) in the public domain

Common Crawled "Web Extracted Text"

Menu

Lemon

Introduction

The lemon, Citrus Limon (l.) Osbeck, is a species of small evergreen tree in the flowering plant family rutaceae. The tree's ellipsoidal yellow fruit is used for culinary and non-culinary purposes throughout the world, primarily for its juice, which has both culinary and cleaning uses. The juice of the lemon is about 5% to 6% citric acid, with a ph of around 2.2, giving it a sour taste.

Article

The origin of the lemon is unknown, though lemons are thought to have first grown in Assam (a region in northeast India), northern Burma or China. A genomic study of the lemon indicated it was a hybrid between bitter orange (sour orange) and citron.

Please enable JavaScript to use our site.

Home

Products

Shipping

Contact

FAQ

Dried Lemons, $3.59/pound

Organic dried lemons from our farm in California.

Lemons are harvested and sun-dried for maximum flavor.

Good in soups and on popcorn.

The lemon, Citrus Limon (l.) Osbeck, is a species of small evergreen tree in the flowering plant family rutaceae. The tree's ellipsoidal yellow fruit is used for culinary and non-culinary purposes throughout the world, primarily for its juice, which has both culinary and cleaning uses. The juice of the lemon is about 5% to 6% citric acid, with a ph of around 2.2, giving it a sour taste

Lorem ipsum dolor sit amet, consectetur adipiscing elit.

Curabitur in tempus quam. In mollis et ante at consectetur.

Aliquam erat volutpat. Donec at lacinia est.

Duis semper, magna tempor interdum suscipit, ante elit molestie urna, eget efficitur risus nunc ac elit.

Fusce quis blandit lectus. Mauris at mauris a turpis tristique lacinia at nec ante.

Aenean in scelerisque tellus, a efficitur ipsum.

Integer justo enim, ornare vitae sem non, mollis fermentum lectus.

Mauris ultrices nisl at libero porta sodales in ac orci.

function Ball(r){

this.radius = r;

this.area = pi * r ** 2;

this.show = function()

{ drawCircle(r);

}

}

has a lot of gibberish

boiler-plate text like menus

offensive language, placeholder text, source code, etc.

Make clean corpus by filtering out low quality text that is suitable for NLP tasks

Let us illustrate the processes with an example

Menu

Lemon

Introduction

The lemon, Citrus Limon (l.) Osbeck, is a species of small evergreen tree in the flowering plant family rutaceae. The tree's ellipsoidal yellow fruit is used for culinary and non-culinary purposes throughout the world, primarily for its juice, which has both culinary and cleaning uses. The juice of the lemon is about 5% to 6% citric acid, with a ph of around 2.2, giving it a sour taste.

Article

The origin of the lemon is unknown, though lemons are thought to have first grown in Assam (a region in northeast India), northern Burma or China. A genomic study of the lemon indicated it was a hybrid between bitter orange (sour orange) and citron.

Please enable JavaScript to use our site.

Home

Products

Shipping

Contact

FAQ

Dried Lemons, $3.59/pound

Organic dried lemons from our farm in California.

Lemons are harvested and sun-dried for maximum flavor.

Good in soups and on popcorn.

The lemon, Citrus Limon (l.) Osbeck, is a species of small evergreen tree in the flowering plant family rutaceae. The tree's ellipsoidal yellow fruit is used for culinary and non-culinary purposes throughout the world, primarily for its juice, which has both culinary and cleaning uses. The juice of the lemon is about 5% to 6% citric acid, with a ph of around 2.2, giving it a sour taste

Lorem ipsum dolor sit amet, consectetur adipiscing elit.

Curabitur in tempus quam. In mollis et ante at consectetur.

Aliquam erat volutpat. Donec at lacinia est.

Duis semper, magna tempor interdum suscipit, ante elit molestie urna, eget efficitur risus nunc ac elit.

Fusce quis blandit lectus. Mauris at mauris a turpis tristique lacinia at nec ante.

Aenean in scelerisque tellus, a efficitur ipsum.

Integer justo enim, ornare vitae sem non, mollis fermentum lectus.

Mauris ultrices nisl at libero porta sodales in ac orci.

function Ball(r){

this.radius = r;

this.area = pi * r ** 2;

this.show = function()

{ drawCircle(r);

}

}

Remove any page that contains "lorem ipsum" or curly bracket

Menu

Lemon

Introduction

The lemon, Citrus Limon (l.) Osbeck, is a species of small evergreen tree in the flowering plant family rutaceae. The tree's ellipsoidal yellow fruit is used for culinary and non-culinary purposes throughout the world, primarily for its juice, which has both culinary and cleaning uses. The juice of the lemon is about 5% to 6% citric acid, with a ph of around 2.2, giving it a sour taste.

Article

The origin of the lemon is unknown, though lemons are thought to have first grown in Assam (a region in northeast India), northern Burma or China. A genomic study of the lemon indicated it was a hybrid between bitter orange (sour orange) and citron.

Please enable JavaScript to use our site

Home

Products

Shipping

Contact

FAQ

Dried Lemons, $3.59/pound

Organic dried lemons from our farm in California.

Lemons are harvested and sun-dried for maximum flavor.

Good in soups and on popcorn.

The lemon, Citrus Limon (l.) Osbeck, is a species of small evergreen tree in the flowering plant family rutaceae. The tree's ellipsoidal yellow fruit is used for culinary and non-culinary purposes throughout the world, primarily for its juice, which has both culinary and cleaning uses. The juice of the lemon is about 5% to 6% citric acid, with a ph of around 2.2, giving it a sour taste

Lorem ipsum dolor sit amet, consectetur adipiscing elit.

Curabitur in tempus quam. In mollis et ante at consectetur.

Aliquam erat volutpat. Donec at lacinia est.

Duis semper, magna tempor interdum suscipit, ante elit molestie urna, eget efficitur risus nunc ac elit.

Fusce quis blandit lectus. Mauris at mauris a turpis tristique lacinia at nec ante.

Aenean in scelerisque tellus, a efficitur ipsum.

Integer justo enim, ornare vitae sem non, mollis fermentum lectus.

Mauris ultrices nisl at libero porta sodales in ac orci.

function Ball(r){

this.radius = r;

this.area = pi * r ** 2;

this.show = function()

{ drawCircle(r);

}

}

Remove lines which do not have terminal punctuation marks

Remove any page that contains "lorem ipsum" or curly bracket

Menu

Lemon

Introduction

The lemon, Citrus Limon (l.) Osbeck, is a species of small evergreen tree in the flowering plant family rutaceae. The tree's ellipsoidal yellow fruit is used for culinary and non-culinary purposes throughout the world, primarily for its juice, which has both culinary and cleaning uses. The juice of the lemon is about 5% to 6% citric acid, with a ph of around 2.2, giving it a sour taste.

Article

The origin of the lemon is unknown, though lemons are thought to have first grown in Assam (a region in northeast India), northern Burma or China. A genomic study of the lemon indicated it was a hybrid between bitter orange (sour orange) and citron.

Please enable JavaScript to use our site

Home

Products

Shipping

Contact

FAQ

Dried Lemons, $3.59/pound

Organic dried lemons from our farm in California.

Lemons are harvested and sun-dried for maximum flavor.

Good in soups and on popcorn.

The lemon, Citrus Limon (l.) Osbeck, is a species of small evergreen tree in the flowering plant family rutaceae. The tree's ellipsoidal yellow fruit is used for culinary and non-culinary purposes throughout the world, primarily for its juice, which has both culinary and cleaning uses. The juice of the lemon is about 5% to 6% citric acid, with a ph of around 2.2, giving it a sour taste

Lorem ipsum dolor sit amet, consectetur adipiscing elit.

Curabitur in tempus quam. In mollis et ante at consectetur.

Aliquam erat volutpat. Donec at lacinia est.

Duis semper, magna tempor interdum suscipit, ante elit molestie urna, eget efficitur risus nunc ac elit.

Fusce quis blandit lectus. Mauris at mauris a turpis tristique lacinia at nec ante.

Aenean in scelerisque tellus, a efficitur ipsum.

Integer justo enim, ornare vitae sem non, mollis fermentum lectus.

Mauris ultrices nisl at libero porta sodales in ac orci.

function Ball(r){

this.radius = r;

this.area = pi * r ** 2;

this.show = function()

{ drawCircle(r);

}

}

Deduplicate the text

Remove lines which do not have terminal punctuation marks

Remove any page that contains "lorem ipsum" or curly bracket

Remove bad words by using a manual list of bad words (any page that contains a bad word will be removed)

In general, all pre-processing data pipelines follow CCNet pipeline with modifications in the components of the pipeline

For example, pile-CC uses the WARC files (instead of WET) which contain raw HTML pages then applies filtering (using jusText) to extract text content

jusText

(Extract text data)

.WARC

Language Detection

(Pycld2)

Deduplication and Targeted Quality filtering

It uses fuzzy deduplication over exact deduplication algorithm as in C4

Pile-CC

(227 GiB)

Significant duplicates in CC are exact duplicates [Ref]. Therefore, one can use fuzzy deduplication first followed by exact deduplication.

RefinedWeb (RW)

50% of CC was removed after language identification

.WARC

Trafilatura-LID

RW-Raw

RefinedWeb (RW)

50% of CC was removed after language identification

.WARC

Trafilatura-LID

Quality Filtering

RW-Raw

24% of RW-Raw was removed after Quality filtering

RW-Filtered

It used both fuzzy and exact deduplication techniques.

What is the impact of having duplicate content?

In total, this stringent approach removes almost 90% of data from a single snapshot of the common crawl

RefinedWeb (RW)

.WARC

Trafilatura-LID

Quality Filtering

Deduplication

(Fuzzy-Exact)

50% of CC was removed after language identification

24% of RW-Raw was removed after Quality filtering

12% of RW-filtered was removed after deduplication

RW-Raw

RW-Filtered

Here is a quick comparison of the pipelines we have seen so far

So far we have seen that the pre-processing pipeline takes in different forms of text data. Why not use Audio (using ASR), Scanned documents (using OCR)?

Using these modalities are helpful for low-resource languages

SETU Pipeline

Data Sources:

- Web (Curated and manually verified)

- Videos

- Digitized PDF

Language Detection:

- INDICLID, CLD3, NLLB

Scraping:

- Trafilatura

Deduplication:

- Fuzzy (MinHash)

Name of the dataset:

Sangraha Verified

Deduplication Deserves some attention!

The RefinedWeb Dataset for Falcon LLM:

the Pythia suite of models found that deduplicating The Pile had a limited impact on zero-shot performance, questioning whether deduplication is as relevant for curated corpora as it for predominantly web-based datasets.

Perfect Deduplication is Difficult

For example, it is found that a single sentence with 61 words was repeated 60000 times in C4 [Ref]

Not only in C4 but also in other datasets such as RealNews [Ref]

Therefore, the natural question is, Does duplicate content degrade the performance of the model?

Including the Pile: "We found the Pile was particularly full of duplicate documents, and advise future researchers using the Pile to perform additional de-duplication processing" [Ref]*

* however, the Pythia suite of models found that deduplicating The Pile had a limited impact on zero-shot performance

Why Deduplication is important?

Duplication

-

allows the model to memorize the training data

-

gives a wrong picture of test performance

-

susceptible to privacy risks

The graph on the right shows the number of (exact) duplicates of 400-character sequence.

We can see that millions of sequences were repeated at least 10 times in the dataset

For instance, a sequence that is present 10 times in the training data is on average generated 1000x more often than a sequence that is present only once [Ref]

for a 1B parameters model, a hundred duplicates are harmful; at 175B, even a few duplicates could have a disproportionate effect [Ref]

A Pretrainer’s Guide

A “high-quality” Reddit post does not look like a “high-quality“ academic paper; and even with academic papers, quality measured by peer review has high variance

All pipelines are leaky!

Insights from training Gopher:

We do not attempt to filter out low quality documents by training a classifier based on a “gold” set of text, such as English Wikipedia or pages linked from Reddit , as this could inadvertently bias towards a certain demographic or erase certain dialects or sociolects from representation

Open Pre-trained Transformer LM

We found the Pile was particularly full of duplicate documents, and advise future researchers using the Pile to perform additional de-duplication processing.

Learning from Repeated Data:

performance of an 800M parameter model can be degraded to that of a 2x smaller model (400M params) by repeating 0.1% of the data 100 times, despite the other 90% of the training tokens remaining unique

Module 3: Pre-training Datasets

Mitesh M. Khapra, Arun Prakash A

AI4Bharat, Department of Computer Science and Engineering, IIT Madras

Pre-training Datasets

Monolingual

Multilingual

We can also group based on the diversity of data sources (web,books,wiki,code..)

en

en,gu,hi,sa,ta,te..

For convenience, the source code of 300+ programming languages can be considered as monolingual (english) datasets

code

Some publically released pre-training datasets (for ex, BookCorpus) are no longer available

BookCorpus

Wikipedia

WebText

RealNews

C4, The Pile

ROOTS

Falcon

RedPajama

So researchers build their own versions (say, OpenWebText) by scraping the web by following similar steps in the original paper.

Some datasets are private, for example, webtext

BookCorpus

Wikipedia

OpenWebText

RealNews

C4

ROOTS

RefinedWeb (Falcon)

The Pile

DOLMA

mC4

Stack

Pre-training Datasets

Monolingual

Multi-lingual

Sangraha

Of these, we will be focusing on..

RedPajama-v2

RedPajama-v1

C4

ROOTS

RefinedWeb (Falcon)

RedPajama-v1/v2

The Pile

DOLMA

mC4

Stack

Sangraha

One way to compare the size of the datasets is to use the (uncompressed) storage memory

The other useful way is to compare the size of the datasets in number of tokens (related to scaling law)

Datasets such as The Pile, and DOLMA emphasize diversity in the pre-training data.

Datasets such as RefinedWeb suggest that one could get good performance with web only data

C4

ROOTS

DOLMA

mC4

Sangraha

Dataset Name

# of tokens

~156 Billion

Is Curated

No

Diversity

Webpage

~170 Billion

Yes

22 sources

> 1 Trillion

Yes

380 Programing languages

5 Trillion (600B in public)

No

Webpage

1.2/30 Trillion

Yes

Webpage, Books, Arxiv, Wiki, StackExch

3 Trillion

Yes

Webpage, Books, Wiki, The Stack, STEM

~418 Billion

No

Webpage

~341 Billion

Yes

natural and programming languages

251 Billion

Yes

Web, videos, digitized pdf,synthetic

Languages

English

English

Code

English

English/Multi

English

Multi

Multi

Multi

Composite Datasets

The Pile (2020)

RedPajama-V1* (2022)

DOLMA (2024)

~170 Billion

Observe the increase in token counts for each year

*After extensive deduplication, the number of tokens reduces to 670B. The resulting dataset is called SlimPajama

Data Diversity

Note, however, that the recent RefinedWeb pipeline demonstrates that sourcing a large scale, high quality data from web alone is sufficient to get a better performance[Ref]

Suppose we decided to train a model for 15 million training steps (\(2^{16}\) tokens per training step), resulting in 1 Trillion tokens

Books(10%)

Wikipedia (10%)

OpenWebText* (15%)

RealNews (10%)

Stories (5%)

Codes (10%)

Sampling

Model

C4(40%)

Then we take these \(2^{16}\) tokens by sampling from all the available data sources according to the pre-defined distribution

Utilizing high-quality and diverse datasets is crucial for achieving optimal downstream performance

Books(10%)

Wikipedia (10%)

OpenWebText* (15%)

RealNews (10%)

Stories (5%)

Codes (10%)

Sampling

Model

C4(40%)

*OpenWebText is an open version of WebText

Almost all LLM models predominately used web data (from The Pile, C4, CC, Custom scraping)

WiKi and Books are the next common source

Models like ALPHA CODE uses 100% code data

Models like LaMDA and PALM gave more weightage to conversation data

Some of these models were trained on multilingual datasets, as shown in the next slide

Source: Pre-traininer's Guide

M-L: Multi-Lingual

H: Heuristics

C: Classifiers

Source: Pre-traininer's Guide

Are we reaching the limit on the size of the data?

Could we use LLMs like GPT-4 to generate synthetic data?

Would small (30 B to 100B) but high-quality datasets be ever helpful in building a good model ?

Is mixing code data (non-ambiguous) and text data (ambiguous) helpful or harmful ?

Some Questions to Ponder

Synthetic Dataset

TextBook are all you need

Textbook quality refers to content that has educational value (for teaching purpose)

AT WHICH TRAINING STAGE DOES CODE DATA HELP LLMS REASONING? (ICLR 2024)

References

Gopher: Massive Text

| Data source | Data size | Documents | Tokens | Sampling proportion |

|---|---|---|---|---|

| Massive web | 1.9 TB | 604 M | 506 B | 48% |

| Books | 2.1 TB | 4M | 560 B | 27% |

| C4 | 0.75TB | 361M | 182 B | 10% |

| News | 2.7 TB | 1.1 B | 676 B | 10% |

| GitHub | 3.1 TB | 142 M | 422B | 3% |

| Wikipedia | 0.001 TB | 6 M | 4B | 2% |

Private, used custom scrapper

Number of training tokens: 300 Billion tokens

Diversity of data sources

LLaMA (use only open data)

| Data source | Data size (TB) | Documents | Tokens | Sampling proportion |

|---|---|---|---|---|

| Common Crawl | 3.3 | - | - | 67% |

| C4 | 0.78 | 361 M | 182 B | 15% |

| GitHub | 0.3 | - | - | 4.5% |

| Wikipedia | 0.08 | 6 M | 4B | 4.5% |

| Books | 0.08 | - | - | 4.5% |

| Arxiv | 0.09 | 2.5% | ||

| StackExchange | 0.07 | 2% |

Data Quality Measure

Fix the model and just change the input data source, see how much the performance increase in the down stream tasks.

Information Finetuning

Benchmarking Evaluation Tasks

Benchmarking Evaluation Tasks

Challenges

Test-set leakage

Scaling: Macro vs Micro

Draw cartoon: Dropping stone, An old man got puzzled