Workshop on Transformer-based NLP using Hugging Face

Arun Prakash A

Outline

- Conceptual Guide to Transformer Models
  - Transformer architecture
  - Processing input and output
  - Pre-training objectives for BERT, GPT, BART
  - Decoding strategies for GPT models
  - Evolution tree, and what is the best setting?
- Why Hugging Face?
  - What is the motivation?
  - Various modules
  - HF Hub

Note: in this workshop we can only briefly cover the core concepts, so that one can sail through the Hugging Face documentation.

For more details, you may read the lectures by Prof. Mitesh Khapra (all lecture recordings will be made available on YouTube) or watch the lectures by Andrej Karpathy.

I assume that you

- have completed the Deep Learning course
- have minimal experience in PyTorch or TensorFlow (so that you know the typical training cycle)

This talk covers the core concepts that one should know to use/fine-tune/pre-train language models

Let's get started

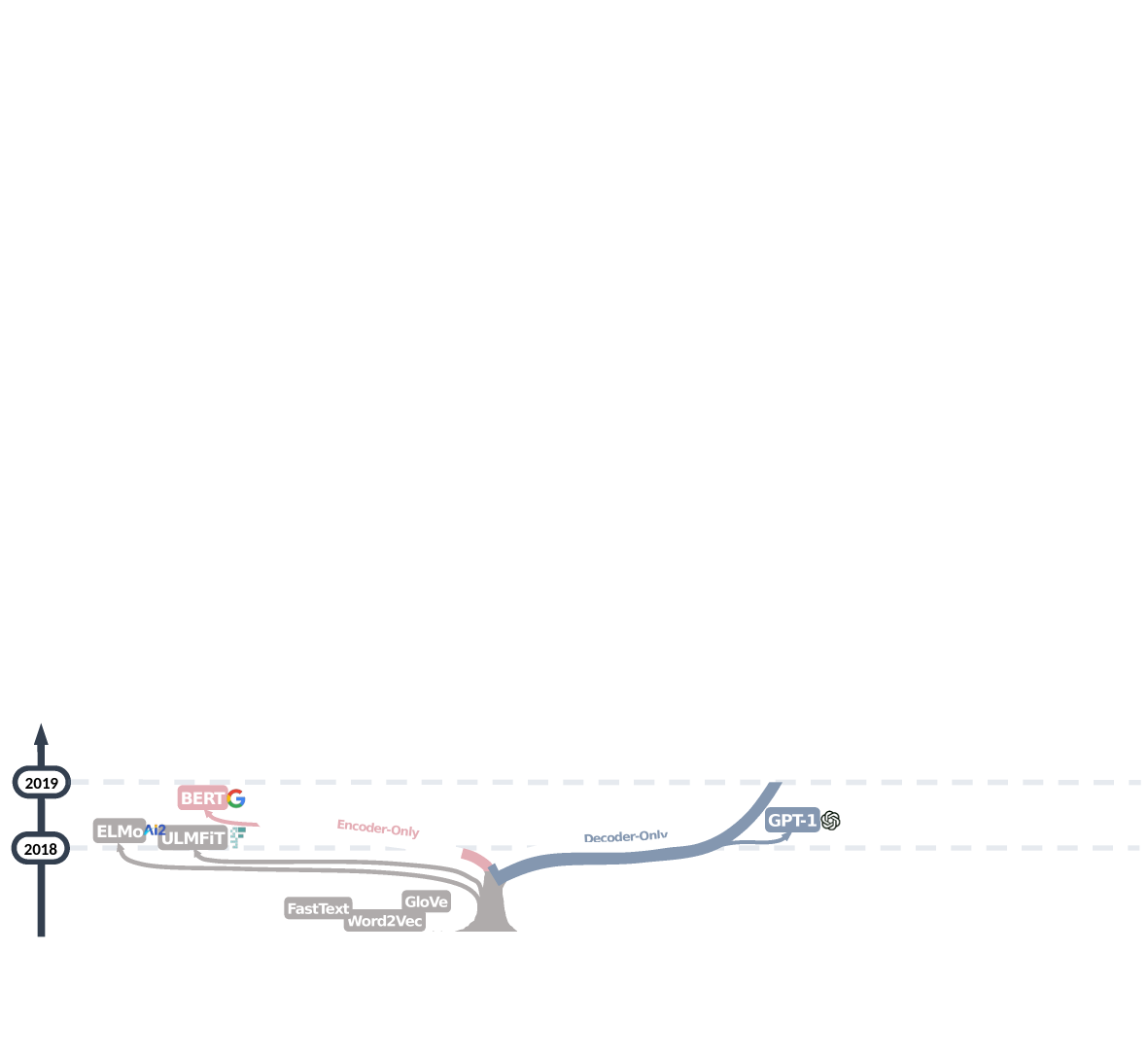

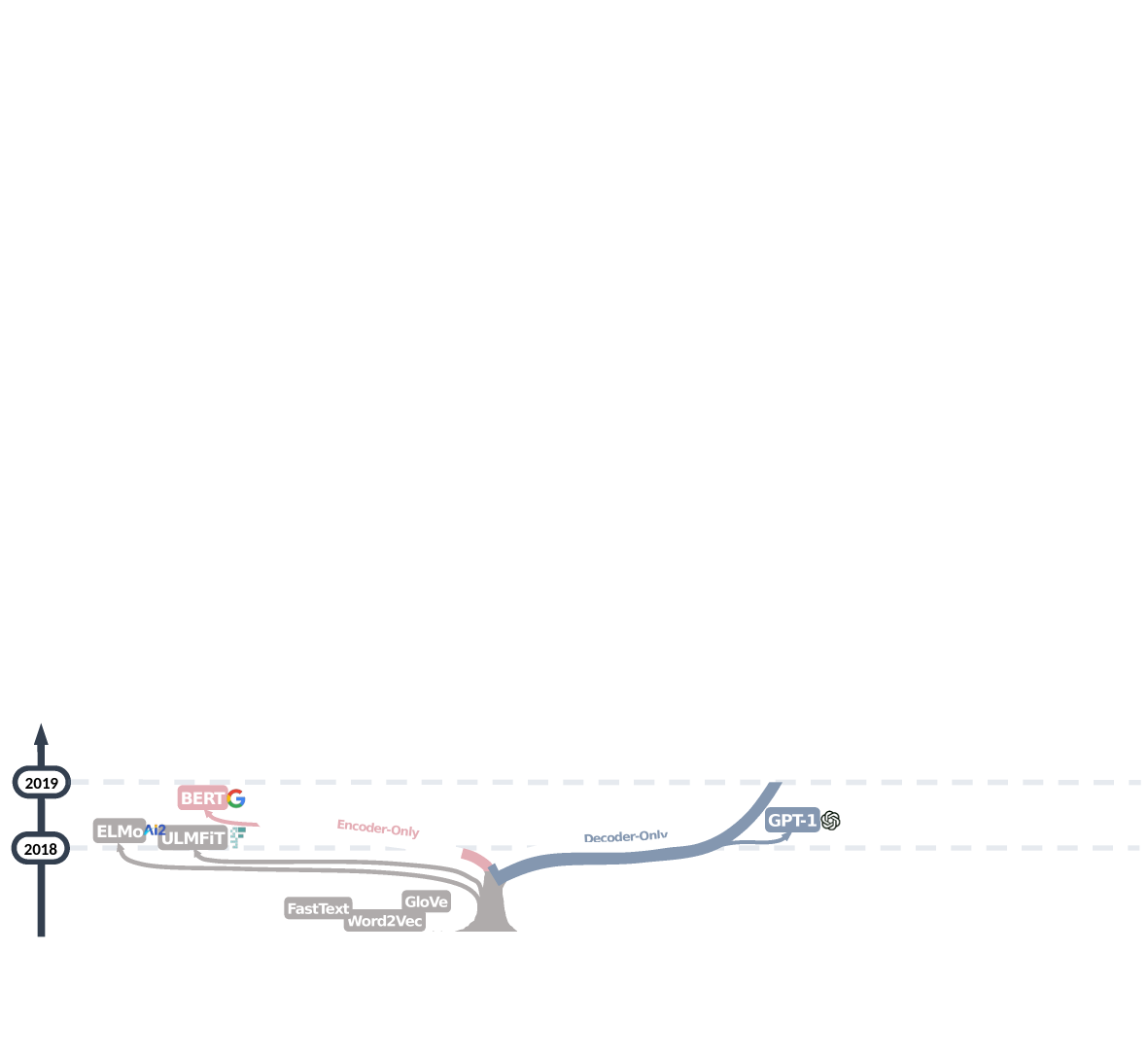

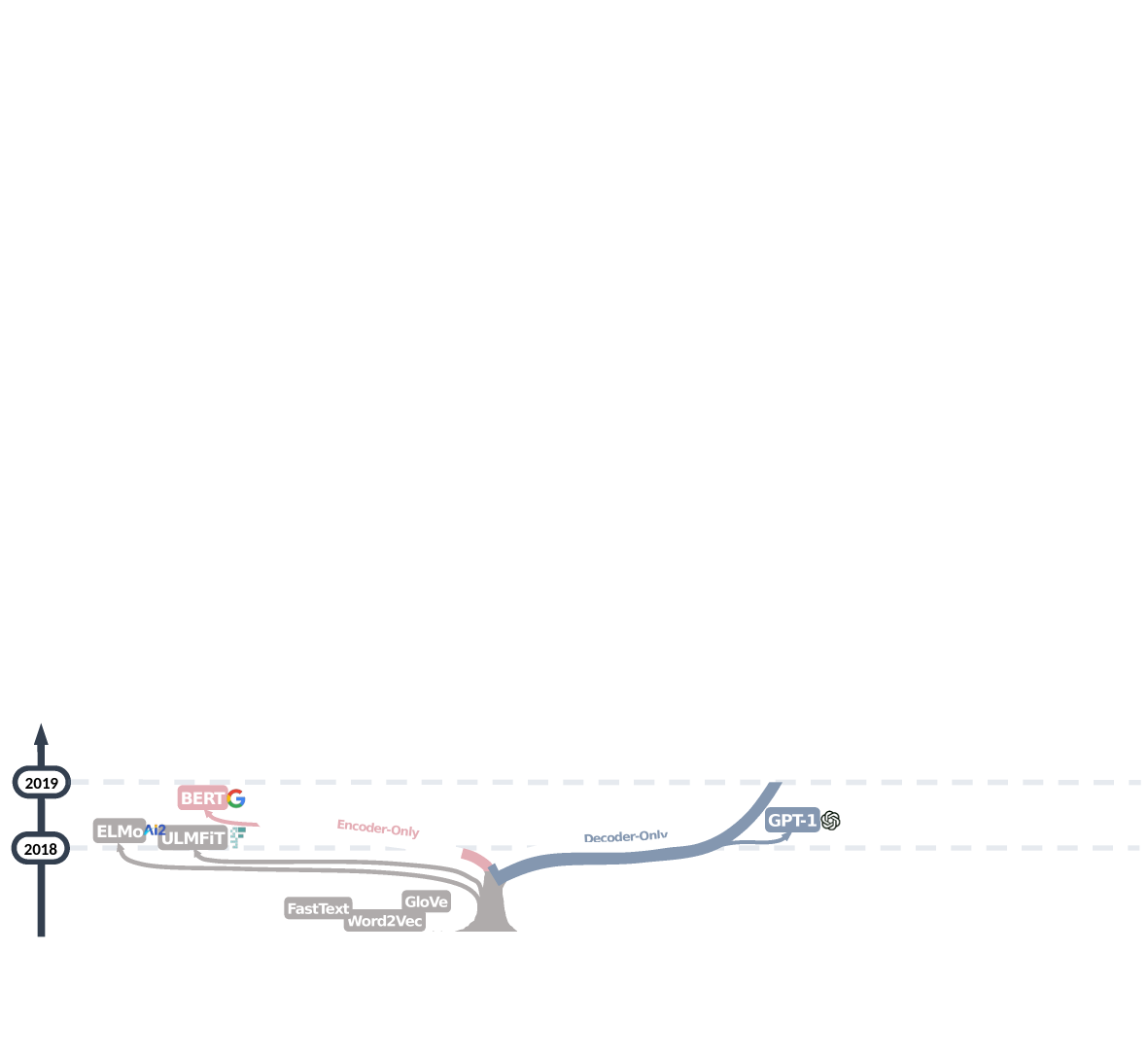

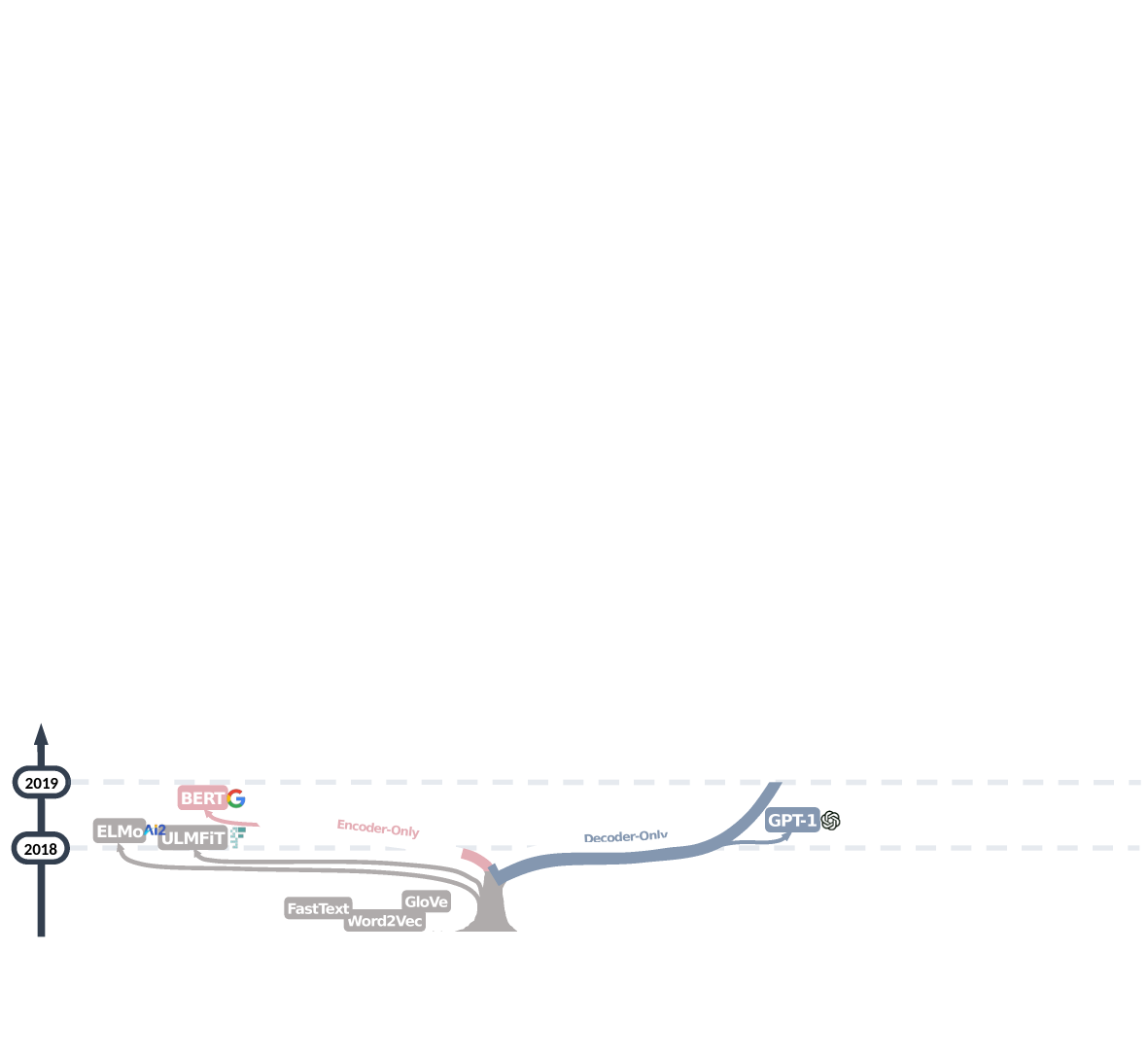

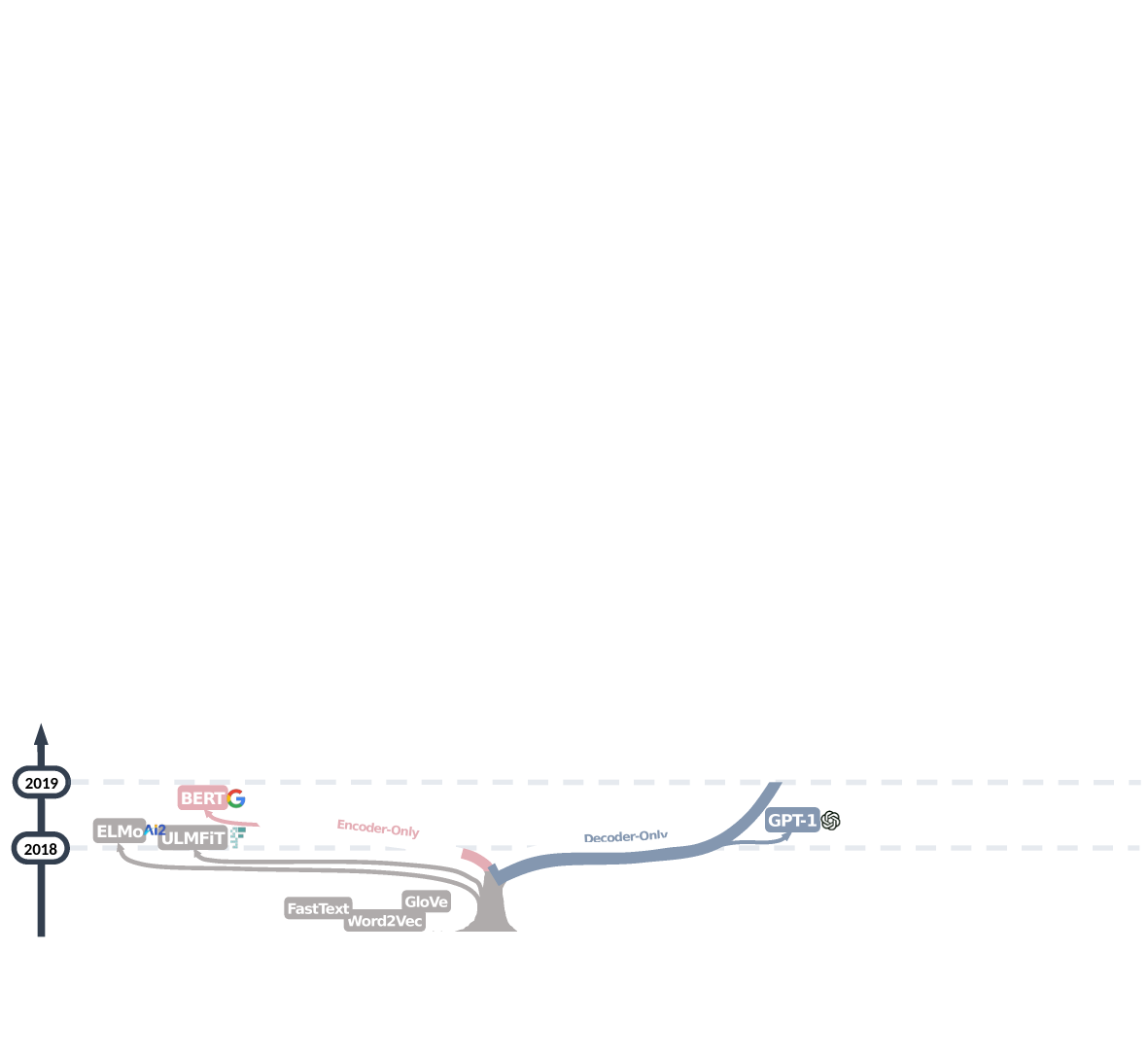

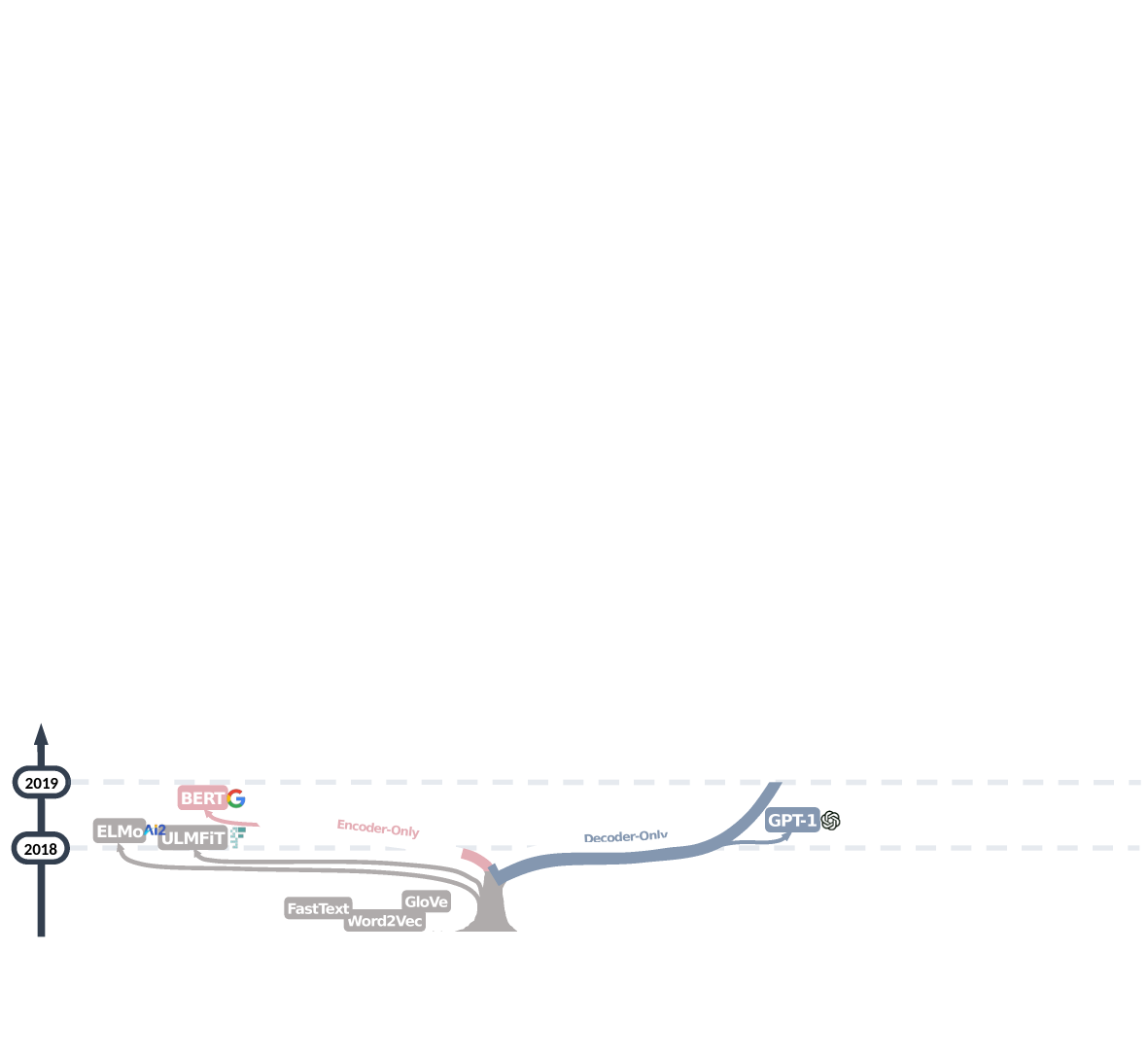

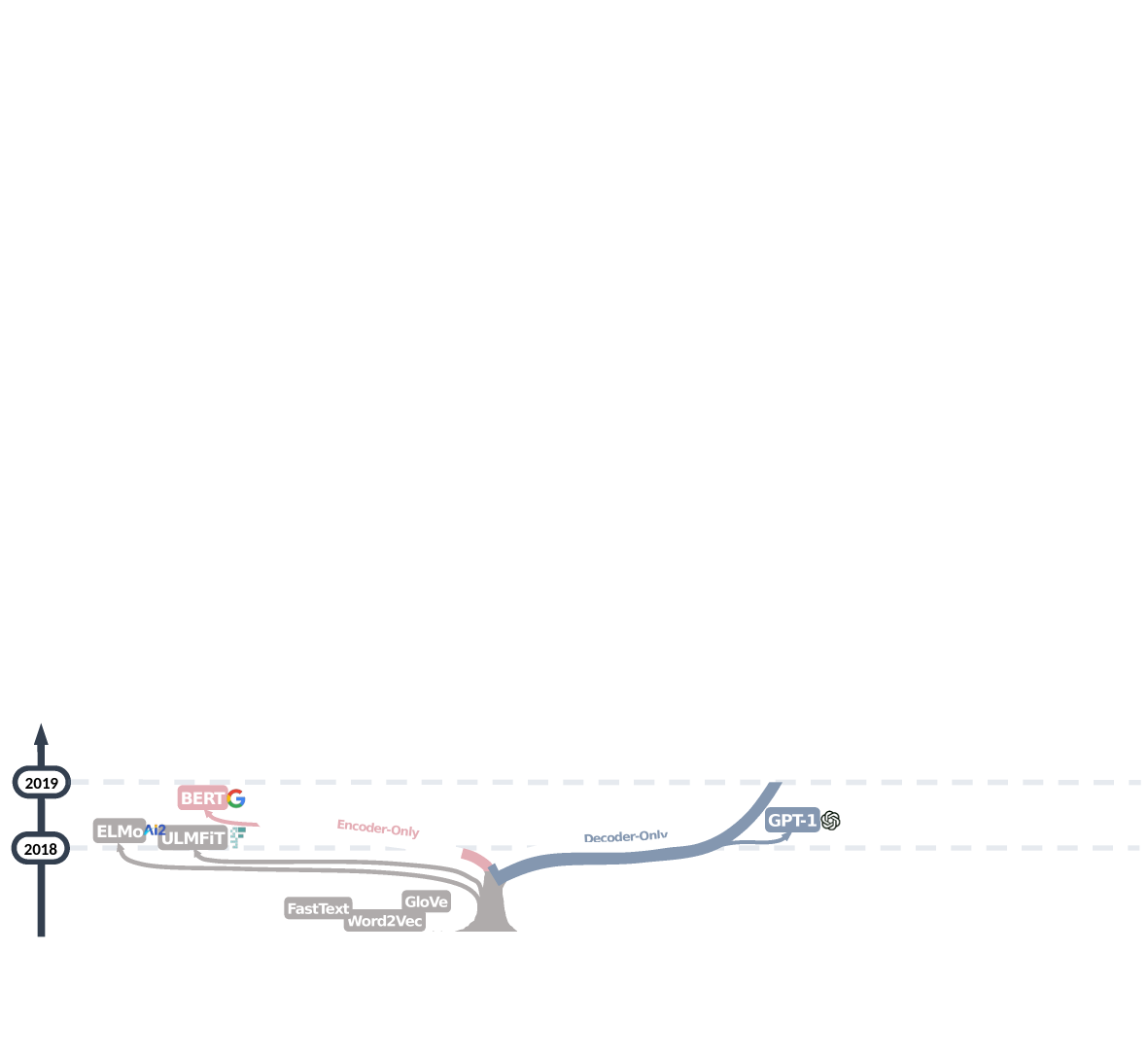

Generations of Language Modelling (in order of increasing task-solving capacity)

- 1990: Statistical LMs (n-gram models). Role: specific task helper
- 2013: Neural LMs (word2vec, context modelling). Role: task-agnostic feature learners
- 2017: Transformers (ELMo, GPT, BERT; pre-train, fine-tune). Role: transfer learning for NLP
- 2020: LLMs (GPT-3, InstructGPT; emerging abilities). Role: general language models

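Scaling Parameters

[Figure: the models Transformer, GPT-2, Megatron LM, GPT-3 and GShard (followed by "?") placed on a "number of synapses" scale alongside fruit fly, honey bee, mouse, cat and brain.]

Scaling Dataset

[Figure: pre-training datasets: BookCorpus, Wikipedia, OpenWebText, RealNews, C4, The Pile, ROOTS.]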


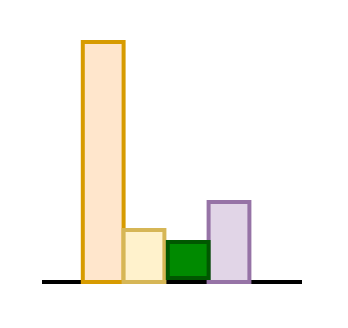

What is the scaling ratio between model size and dataset size?

Kaplan scaling law, Chinchilla scaling law

How best can we make use of the available compute resources?

- Train a larger model for fewer training steps
- Train a smaller model for more training steps
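As a rough illustration of this trade-off, the sketch below splits a fixed compute budget between model size and training tokens. It relies on two rules of thumb that are assumptions here rather than slide content: training compute of roughly C ≈ 6·N·D FLOPs (N parameters, D tokens), and a Chinchilla-style ratio of about 20 training tokens per parameter. The function name and the example budget are hypothetical.

```python
import math

# Assumptions (not from the slides): C ~= 6 * N * D training FLOPs, and a
# Chinchilla-style ratio of roughly 20 training tokens per parameter.
FLOPS_PER_PARAM_TOKEN = 6
TOKENS_PER_PARAM = 20


def compute_optimal_split(compute_flops):
    """Return (parameters N, tokens D) that spend the budget with D = 20 * N."""
    n_params = math.sqrt(compute_flops / (FLOPS_PER_PARAM_TOKEN * TOKENS_PER_PARAM))
    n_tokens = TOKENS_PER_PARAM * n_params
    return n_params, n_tokens


if __name__ == "__main__":
    budget = 5.88e23  # hypothetical compute budget in FLOPs
    n, d = compute_optimal_split(budget)
    print(f"parameters ~ {n:.2e}, tokens ~ {d:.2e}")
```

Under these assumptions, a larger budget grows the model and the dataset together instead of growing only the parameter count.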

Image source: Mooler


Scaling laws


- GPT-3 with 175 billion parameters (proprietary)
- GPT-J with 6 billion parameters (open-sourced)
- Jurassic-1 with 178 billion parameters (proprietary)
- Gopher with 280 billion parameters (proprietary)
- GLaM with 1,200 billion parameters (proprietary)
- InstructGPT proposed an RLHF-based alignment objective for conversational models
- Decoder-only models dominated the field
- Meta released OPT with 175 billion parameters (open-sourced)
- ChatGPT was built on top of the ideas in InstructGPT


The Transformer

A simple encoder-decoder model with an attention mechanism at its core

Takes in a sequence (words, converted to tokens, converted to embeddings)

Outputs a sequence (conditional probabilities, converted to predicted tokens)

Let's first understand the input block

[Figure: the Transformer architecture. Encoder: Source Input Block, Multi-Head Attention, Add & Norm, Feed forward NN, Add & Norm. Decoder: Target Input Block, Multi-Head Masked Attention, Add & Norm, Multi-Head cross Attention, Add & Norm, Feed forward NN, Add & Norm, Output Block (tied).]


[Figure: translation example. The source sentence "I am reading a book" enters the Encoder through the Source Input Block; the target sentence "Naan oru puthagathai padiththu kondirukiren" enters the Decoder through the Target Input Block.]

Input Block

I am reading a book → Tokenizer → Token Ids → embeddings

Tokenizer

I am reading a book → ["i", "am", "reading", "a", "book"]

Contains:

- Normalizer
- Pre-tokenizer
- Tokenization algorithm

For example:

- Normalizer: lowercase (I -> i)
- Pre-tokenizer: whitespace
- Tokenization algorithm: BPE

How it is built and used:

- We have to train the tokenization algorithm using all the samples from a dataset
- It constructs a vocabulary of size \(|V|\) (typically 30,000+ or 50,000+)
- The tokenizer then splits the input sequence into tokens (a token could be a whole word or a sub-word)
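The three stages can be sketched in plain Python. This is a toy character-level BPE written for illustration, assuming a lowercase normalizer and a whitespace pre-tokenizer; it is not how a production tokenizer library is implemented, and all function names are hypothetical.

```python
from collections import Counter


def normalize(text):
    """Normalizer: lowercase the text."""
    return text.lower()


def pre_tokenize(text):
    """Pre-tokenizer: split on whitespace."""
    return text.split()


def apply_merge(symbols, pair):
    """Replace each adjacent occurrence of `pair` with the merged symbol."""
    merged, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return merged


def train_bpe(corpus, num_merges):
    """Learn up to `num_merges` merge rules from a list of sentences."""
    # Every word starts as a sequence of characters, weighted by frequency.
    words = Counter()
    for sentence in corpus:
        for word in pre_tokenize(normalize(sentence)):
            words[tuple(word)] += 1
    merges = []
    for _ in range(num_merges):
        pairs = Counter()
        for symbols, freq in words.items():
            for pair in zip(symbols, symbols[1:]):
                pairs[pair] += freq
        if not pairs:
            break  # every word is already a single token
        best = max(pairs, key=pairs.get)  # most frequent adjacent pair
        merges.append(best)
        updated = Counter()
        for symbols, freq in words.items():
            updated[tuple(apply_merge(list(symbols), best))] += freq
        words = updated
    return merges


def tokenize(text, merges):
    """Split text into tokens by replaying the learned merges in order."""
    tokens = []
    for word in pre_tokenize(normalize(text)):
        symbols = list(word)
        for pair in merges:
            symbols = apply_merge(symbols, pair)
        tokens.extend(symbols)
    return tokens


if __name__ == "__main__":
    corpus = ["I am reading a book", "I am reading a paper", "reading books"]
    merges = train_bpe(corpus, num_merges=50)
    print(tokenize("I am reading a book", merges))
```

Words seen often enough during training end up as whole-word tokens, while unseen words fall back to smaller sub-word pieces.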

Token Ids

["i":2, "am":8, "reading":75, "a":4, "book":100]

- Each token is assigned a unique integer (ID)
- These IDs are specific to a tokenizer trained on a particular dataset
- Therefore, we have to use the same tokenizer (the one used to train the model) for all downstream tasks
- Model-specific special tokens are inserted into the existing list of token_ids, for example

["[BOS]":1, "i":2, "am":8, "reading":75, "a":4, "book":100, "[EOS]":3]
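A minimal sketch of this mapping, assuming a toy vocabulary whose IDs mirror the example above (the `encode` and `decode` helpers are hypothetical names, not a library API):

```python
# Toy vocabulary; the IDs mirror the example above and are otherwise arbitrary.
vocab = {"[BOS]": 1, "i": 2, "[EOS]": 3, "a": 4, "am": 8, "reading": 75, "book": 100}
id_to_token = {idx: tok for tok, idx in vocab.items()}


def encode(tokens, add_special_tokens=True):
    """Map tokens to IDs and optionally wrap them in [BOS] ... [EOS]."""
    ids = [vocab[tok] for tok in tokens]
    if add_special_tokens:
        ids = [vocab["[BOS]"]] + ids + [vocab["[EOS]"]]
    return ids


def decode(ids):
    """Map IDs back to tokens using the same vocabulary."""
    return [id_to_token[i] for i in ids]


if __name__ == "__main__":
    ids = encode(["i", "am", "reading", "a", "book"])
    print(ids)
    print(decode(ids))
```

Decoding with a different vocabulary would silently produce different tokens, which is why the tokenizer and the model have to stay paired.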

embeddings

[ [BOS]:1, i:2, am:8, reading:75, a:4, book:100, [EOS]:0 ]

- The embedding is a look-up table that returns a vector of size, say, 512, 1024, 2048, ...
- The token "i" is assigned the vector at index 2 in the embedding table
- The token "a" is assigned the vector at index 4 in the embedding table
- This mapping goes on for all the tokens in an input sequence
- All the embedding vectors are LEARNABLE
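The look-up can be sketched with plain lists. The class below is illustrative only: the name `Embedding`, the initialization scale of 0.02 and the table sizes are assumptions, and a real framework would store the table as a trainable tensor.

```python
import random


class Embedding:
    """A look-up table with one learnable vector (row) per token ID."""

    def __init__(self, vocab_size, d_model, seed=0):
        rng = random.Random(seed)
        # Random initialization (the 0.02 scale is an assumption);
        # training would update these values.
        self.weight = [[rng.gauss(0.0, 0.02) for _ in range(d_model)]
                       for _ in range(vocab_size)]

    def __call__(self, token_ids):
        # Look-up is plain indexing: token ID -> row of the table.
        return [self.weight[i] for i in token_ids]


if __name__ == "__main__":
    emb = Embedding(vocab_size=101, d_model=8)
    vectors = emb([1, 2, 8, 75, 4, 100, 0])
    print(len(vectors), len(vectors[0]))  # sequence length, d_model
```

The vector returned for the token "i" is row 2 of the table, exactly as described in the bullets.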

position embeddings

- We have another embedding table to encode the position of tokens (learnable or fixed)
- We add these position embeddings to the corresponding token embeddings
- The number of position embeddings depends on the context (window) length of the model
- THE ENTIRE PROCESS IS REPEATED FOR THE TARGET INPUT BLOCK
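A sketch of the fixed variant, assuming the sinusoidal scheme of the original Transformer paper (a learnable table would instead be initialized randomly and trained). The function names are hypothetical.

```python
import math


def sinusoidal_position_embeddings(context_length, d_model):
    """Fixed table with one d_model-sized vector per position."""
    table = []
    for pos in range(context_length):
        row = []
        for i in range(d_model):
            # Dimensions 2k and 2k+1 share the same frequency.
            angle = pos / (10000 ** (2 * (i // 2) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table


def add_positions(token_embeddings, position_table):
    """Element-wise sum of each token embedding and its position embedding."""
    if len(token_embeddings) > len(position_table):
        raise ValueError("sequence is longer than the context length")
    return [[t + p for t, p in zip(tok, position_table[pos])]
            for pos, tok in enumerate(token_embeddings)]


if __name__ == "__main__":
    table = sinusoidal_position_embeddings(context_length=16, d_model=4)
    tokens = [[0.1, 0.2, 0.3, 0.4] for _ in range(3)]
    print(add_positions(tokens, table)[0])
```

The table has exactly `context_length` rows, which is why a sequence longer than the context window cannot be given positions.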

Source Input Block: embedding for each input token

- The embedding vectors are randomly initialized
- Along with these, we also pass in an attention mask and a padding mask for batch processing
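To show why a padding mask is needed, the sketch below pads a batch of unequal-length sequences and records which positions hold real tokens. The padding ID and the helper name are hypothetical choices for illustration.

```python
PAD_ID = 0  # hypothetical ID reserved for the padding token


def pad_batch(batch_ids, pad_id=PAD_ID):
    """Right-pad every sequence to the longest one and build the matching mask."""
    max_len = max(len(ids) for ids in batch_ids)
    input_ids, attention_mask = [], []
    for ids in batch_ids:
        n_pad = max_len - len(ids)
        input_ids.append(ids + [pad_id] * n_pad)
        # 1 marks a real token, 0 marks padding that attention should ignore.
        attention_mask.append([1] * len(ids) + [0] * n_pad)
    return input_ids, attention_mask


if __name__ == "__main__":
    ids, mask = pad_batch([[1, 2, 8, 3], [1, 4, 3]])
    print(ids)
    print(mask)
```

The mask lets the model process the whole batch as one rectangular array while ignoring the padded positions.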


- The encoder and decoder blocks of the transformer take in embedding vectors as input and produce an output probability distribution over the tokens in the vocabulary

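[Figure: the source tokens "I", "am", "reading", "a", "book" enter the encoder; the target tokens "Naan", "puthakathai", "padithtu", "kondirukiren" appear on the decoder side.]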

Configuration

One can construct a transformer architecture given a configuration file with the following fields:

- \(d_{model}\): model dimension (= embedding dimension)
- \(n_{heads}\): number of heads
- \(d_{ff}\): hidden dimension of the feed-forward network (often, \(d_{ff}=4d_{model}\))
- \(n_{layers}\): number of layers
- \(dropout_{prob}\): for feed forward, attention, embedding
- activation function
- tie weights?
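These fields can be collected in a small configuration object. The sketch below is hypothetical: the class name, the default values (chosen to resemble a BERT-Base-sized encoder) and the extra `vocab_size` field are assumptions, and the parameter count is only a rough estimate.

```python
from dataclasses import dataclass


@dataclass
class TransformerConfig:
    d_model: int = 768
    n_heads: int = 12
    d_ff: int = 3072           # often 4 * d_model
    n_layers: int = 12
    dropout_prob: float = 0.1
    activation: str = "gelu"
    tie_weights: bool = True
    vocab_size: int = 30522    # assumption: not among the fields listed above

    def __post_init__(self):
        # Each head works on an equal slice of the model dimension.
        if self.d_model % self.n_heads != 0:
            raise ValueError("d_model must be divisible by n_heads")

    @property
    def head_dim(self):
        return self.d_model // self.n_heads

    def approx_encoder_params(self):
        """Rough weight count for an encoder-only stack.

        Ignores biases, normalization layers and the position table.
        """
        attention = 4 * self.d_model ** 2            # W_Q, W_K, W_V, W_O
        feed_forward = 2 * self.d_model * self.d_ff  # two linear layers
        embeddings = self.vocab_size * self.d_model
        return self.n_layers * (attention + feed_forward) + embeddings


if __name__ == "__main__":
    cfg = TransformerConfig()
    print(cfg.head_dim, cfg.approx_encoder_params())
```

With these defaults the estimate comes out at roughly 108 million weights, most of them in the feed-forward layers.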

The Transformer


Originally proposed for the machine translation task

The field has evolved rapidly since then in multiple directions:

- Improvements in attention mechanisms, positional encoding techniques and so on
- Scaling the size of the model (parameters, datasets, training steps)
- Extensive studies on the choices of hyperparameters


Architectural Improvements


Position Encoding

- Absolute
- Relative
- RoPE, NoPE
- ALiBi

Attention

- Full/sparse
- Multi/grouped query
- Flash
- Paged

Normalization

- Layer norm
- RMS norm
- DeepNorm

Activation

- ReLU
- GELU
- Swish
- SwiGLU
- GeGLU

Feed-forward layer

- FFN
- MoE

Normalization placement

- Post-Norm
- Pre-Norm
- Sandwich

Pre-training and Fine-Tuning

Preparing labelled data for each task is laborious and costly

On the other hand, we have a large amount of unlabelled text easily available on the internet

[Figure: a separate Transformer for each task. Input text → predict the class/sentiment; input text → summarize; question and input text → answer.]

Can we make use of such unlabelled data to train a model?

That is called pre-training.

However, what should be the training objective?

Pre-training Objectives

- Encoder (example: BERT). Objective: MLM (masked language modelling). Input \(x_1,<mask>,\cdots,x_{T}\); the model predicts \(P(x_2=?)\) using multi-head attention.
- Decoder (example: GPT). Objective: CLM (causal language modelling). Input \(x_1,x_2,\cdots,x_{i-1}\); the model predicts \(P(x_i)\) using multi-head masked attention.
- Encoder-Decoder (examples: BART, T5). Objective: PLM, denoising. The encoder reads \(x_1,<mask>,\cdots,x_{T}\); the decoder starts from \(<go>\) and predicts \(\cdots,P(x_2|x_1,\cdots),\cdots\) using multi-head masked attention and multi-head cross attention.

Generative Pre-trained Transformer (GPT)

Pre-training Objective: CLM

[Figure: a stack of decoder blocks, Transformer Block 1 to Transformer Block 5.]

Assume that we have now (pre-)trained a model.

Now, we can fine-tune it on different tasks (with slight modifications to the output head) and use it for inference

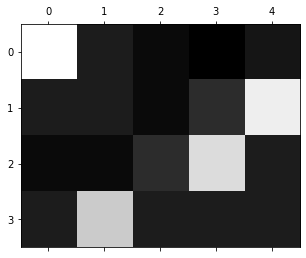

The causal mask allows us to compute all these probabilities in a single pass
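A small sketch of the idea, assuming the mask is applied to the attention scores before the softmax (function names are hypothetical). Row i of the result only places weight on positions up to i, so every next-token prediction sees only its own prefix even though all rows are computed together.

```python
import math


def causal_mask(seq_len):
    """mask[i][j] is True when position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(seq_len)] for i in range(seq_len)]


def masked_softmax(scores, mask):
    """Row-wise softmax that gives zero weight to masked-out positions."""
    weights = []
    for score_row, mask_row in zip(scores, mask):
        # Subtract the largest visible score for numerical stability.
        top = max(s for s, keep in zip(score_row, mask_row) if keep)
        exps = [math.exp(s - top) if keep else 0.0
                for s, keep in zip(score_row, mask_row)]
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights


if __name__ == "__main__":
    scores = [[0.0, 0.0, 0.0] for _ in range(3)]  # uniform raw scores
    for row in masked_softmax(scores, causal_mask(3)):
        print(row)
```

Without the mask, position 1 could attend to position 2 and the training task of predicting the next token would become trivial.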

Decoding Strategies

- Deterministic: Greedy Search, Beam Search. Suitable for translation
- Stochastic: Top-K sampling, Top-P (Nucleus) sampling. Suitable for tasks like text generation and summarization
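The difference between the two families can be sketched for a single decoding step. The example assumes we already have the model's next-token probabilities as a list indexed by token ID; beam search is left out for brevity, and all function names are hypothetical.

```python
import random


def greedy(probs):
    """Deterministic: pick the most probable token ID."""
    return max(range(len(probs)), key=lambda i: probs[i])


def top_k_filter(probs, k):
    """Keep the k most probable tokens and renormalize."""
    keep = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    total = sum(probs[i] for i in keep)
    return {i: probs[i] / total for i in keep}


def top_p_filter(probs, p):
    """Keep the smallest set of top tokens whose cumulative probability reaches p."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    keep, cumulative = [], 0.0
    for i in order:
        keep.append(i)
        cumulative += probs[i]
        if cumulative >= p:
            break
    total = sum(probs[i] for i in keep)
    return {i: probs[i] / total for i in keep}


def sample(filtered, rng):
    """Stochastic: draw one token ID from the filtered distribution."""
    ids = list(filtered)
    return rng.choices(ids, weights=[filtered[i] for i in ids], k=1)[0]


if __name__ == "__main__":
    probs = [0.5, 0.3, 0.15, 0.05]  # hypothetical next-token distribution
    rng = random.Random(0)
    print("greedy:", greedy(probs))
    print("top-k :", sample(top_k_filter(probs, k=2), rng))
    print("top-p :", sample(top_p_filter(probs, p=0.9), rng))
```

Greedy always returns the same token for the same distribution, whereas the sampling variants can return different tokens on each call while still excluding the unlikely tail.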

BERT: MLM

[Figure: the masked input "[mask] enjoyed the [mask] transformers" is passed through a stack of encoder layers (attention, FFN, normalization, residual connection).]

A multi-layer bidirectional transformer encoder architecture.

The BERT Base model contains 12 layers with 12 attention heads per layer.

The words to be masked (15%) in an input sequence are sampled uniformly.

Of these, 80% are replaced with the [mask] token, 10% are replaced with random words, and the remaining 10% are retained as is. (Why?)
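The 15% selection and the 80/10/10 split can be sketched as follows. This is an illustrative version that selects each position independently with probability 0.15, which is an assumption about how the sampling is done; the function name and toy vocabulary are hypothetical.

```python
import random

MASK = "[mask]"


def mask_tokens(tokens, vocab, rng, mask_prob=0.15):
    """Return (corrupted tokens, labels); a label is None where nothing is predicted."""
    corrupted, labels = list(tokens), [None] * len(tokens)
    for i, token in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue                          # position not selected
        labels[i] = token                     # the model must recover this token
        roll = rng.random()
        if roll < 0.8:
            corrupted[i] = MASK               # 80%: replace with the mask token
        elif roll < 0.9:
            corrupted[i] = rng.choice(vocab)  # 10%: replace with a random word
        # remaining 10%: keep the original token unchanged
    return corrupted, labels


if __name__ == "__main__":
    vocab = ["i", "enjoyed", "the", "movie", "transformers", "visuals"]
    tokens = ["i", "enjoyed", "the", "movie", "transformers"]
    print(mask_tokens(tokens, vocab, random.Random(0)))
```

The loss is computed only at positions whose label is not None, including the positions that were left unchanged.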

The special [mask] token won't be part of the dataset while adapting to downstream tasks.

Is the pre-training objective of MLM sufficient for downstream tasks like Question-Answering, where the interaction between sentences is important?

Now, let's extend the input with a pair of sentences (A, B) and a label that indicates whether sentence B naturally follows sentence A.

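Next Sentence Prediction (NSP)

- Input: [CLS] I enjoyed the movie transformers [SEP] The visuals were amazing
- Sentence A: I enjoyed the movie transformers
- Sentence B: The visuals were amazing
- Label: IsNext
- Special tokens: [CLS], [SEP]

[Figure: the combined input is passed through Self-Attention and the Feed Forward Network.]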
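A sketch of how such a training pair could be assembled, following the layout above with a single [SEP] between the sentences (BERT's released implementation also appends a [SEP] after sentence B). The helper names and the 50/50 choice between a true and a random next sentence are assumptions for illustration.

```python
import random


def build_pair_input(tokens_a, tokens_b):
    """Join two tokenized sentences and mark the segment of each token."""
    tokens = ["[CLS]"] + tokens_a + ["[SEP]"] + tokens_b
    # Segment 0 covers [CLS], sentence A and [SEP]; segment 1 covers sentence B.
    segment_ids = [0] * (len(tokens_a) + 2) + [1] * len(tokens_b)
    return tokens, segment_ids


def make_nsp_example(sentences, index, rng):
    """Pair sentence `index` with its true successor or with a random other sentence.

    Needs at least three sentences and index + 1 < len(sentences).
    """
    tokens_a = sentences[index]
    if rng.random() < 0.5:
        tokens_b, label = sentences[index + 1], "IsNext"
    else:
        others = [j for j in range(len(sentences)) if j not in (index, index + 1)]
        tokens_b, label = sentences[rng.choice(others)], "NotNext"
    tokens, segment_ids = build_pair_input(tokens_a, tokens_b)
    return tokens, segment_ids, label


if __name__ == "__main__":
    sentences = [
        "I enjoyed the movie transformers".split(),
        "The visuals were amazing".split(),
        "It rained all day".split(),
    ]
    print(make_nsp_example(sentences, 0, random.Random(0)))
```

The segment IDs are what the segment embeddings are looked up with, so the model can tell sentence A from sentence B.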

[Figure: BERT input representation. Each token of "[CLS] I enjoyed the movie transformers [SEP] The visuals were amazing" is represented by its Token Embedding, Segment Embedding and Position Embedding. The masked input "[CLS] [mask] enjoyed the [mask] transformers [SEP] The [mask] were amazing" is passed through a stack of Encoder Layers (Self-Attention, Feed Forward Network).]

Minimize the objective:
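A sketch of this objective in its usual form, assuming the standard BERT formulation in which the MLM and NSP losses are summed. The symbols \(M\) (set of masked positions), \(\tilde{x}\) (corrupted input pair), \(y\) (the IsNext/NotNext label) and \(\theta\) (model parameters) are introduced here for illustration:

\[
\mathcal{L}(\theta) = \underbrace{-\frac{1}{|M|}\sum_{i \in M} \log P_\theta(x_i \mid \tilde{x})}_{\text{MLM}} \; \underbrace{- \log P_\theta(y \mid \tilde{x})}_{\text{NSP}}
\]

The first term rewards recovering the original tokens at the masked positions, and the second rewards predicting correctly whether sentence B follows sentence A.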

What is the best setting?

[Figure: the design space to explore for each architecture, from pre-training to fine-tuning.]

- Architecture: encoder, encoder-decoder, decoder
- Scale: small, medium, large
- Objective:
  - encoder: MLM (corruption rate, token deletion, span mask)
  - encoder-decoder: de-noising (corruption rate, continuous masking)
  - decoder: CLM, prefix-LM, conditional
- Pre-training data: Wiki, Books, Web Crawl
- Fine-tuning: GLUE, SQuAD, SuperGLUE, WMT-14, WMT-15, WMT-16, CNN/DM
- Hyperparameters: number of training steps, learning rate scheme, optimizer

Language Models are Few Shot Learners, conditional learners

Text to text framework

Instruction fine-tuning

Aligning with user intent

Retrieval Augmented Generation

Agents

Hugging Face Is Here to Help Us

Applying a novel machine learning architecture to a new task can be a complex undertaking, and usually involves the following steps:*

- Implement the model architecture in code, typically based on PyTorch or TensorFlow.
- Load the pretrained weights (if available) from a server.
- Preprocess the inputs, pass them through the model, and apply some task-specific post-processing.
- Implement data loaders and define loss functions and optimizers to train the model.

Each of these steps requires custom logic for each model and task.

Why Hugging Face?

All points are taken from the book NLP with Transformers by Lewis Tunstall


When research groups publish a new article, they will also release the code along with the model weights. However, this code is rarely standardized and often requires days of engineering to adapt to new use cases.

That's where HF Transformers comes to the rescue.


[Figure: the PyTorch ecosystem. Core: tensors, JIT, nn, optim, multiprocessing, quantization, sparse, ONNX, distributed. Libraries built around it: fast.ai, Detectron2, Horovod, Flair, AllenNLP, torchvision, BoTorch, Glow, Lightning, skorch.]

[Figure: the Hugging Face ecosystem: Transformers, Datasets, Evaluate, Trainer, Accelerate, Gradio, Inference Endpoints, PEFT.]

Transformers has layered APIs that allow us to interact at various levels of abstraction

With the Transformers, Tokenizers, and Datasets libraries, we have everything we need to train our very own transformer models!

We have a lot more modules, such as Trainer and Evaluate, and modules for image and audio processing.

We will get into details of the core modules in the notebooks

Rich Ecosystem